The Evolution of AI Art: From Glitches to Masterpieces

AI art has come a long way in a short time. As recently as 2015, "AI art" meant psychedelic swirls and terrifying, multi-eyed dogs generated by Google's DeepDream. Today, tools like Percify can generate photorealistic portraits that are hard to tell apart from a DSLR camera shot.

Phase 1: The GAN Era

Generative Adversarial Networks (GANs), introduced in 2014, were the first big leap. They work by pitting two neural networks against each other: a generator that tries to create a convincing image, and a discriminator that tries to detect whether an image is real or generated. This gave us "This Person Does Not Exist," but the approach was limited. With these early, unconditional models you couldn't tell the AI what to draw; you just got what it gave you.
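To make the tug-of-war concrete, here is a minimal sketch of the two competing objectives in plain Python. It is not a working GAN: there are no networks and no training loop, and the function names and example scores are illustrative assumptions. It only shows how the same "real or fake?" scores reward one side and punish the other, using the standard binary cross-entropy loss.

```python
import math

def bce(prob, label):
    """Binary cross-entropy for one predicted probability and a 0/1 label."""
    eps = 1e-12  # keep log() away from zero
    prob = min(max(prob, eps), 1.0 - eps)
    return -(label * math.log(prob) + (1 - label) * math.log(1.0 - prob))

def discriminator_loss(real_probs, fake_probs):
    """The discriminator wants real images scored near 1 and fakes near 0."""
    losses = [bce(p, 1) for p in real_probs] + [bce(p, 0) for p in fake_probs]
    return sum(losses) / len(losses)

def generator_loss(fake_probs):
    """The generator wants its fakes to be scored as real (near 1)."""
    return sum(bce(p, 1) for p in fake_probs) / len(fake_probs)

# Hypothetical discriminator scores (probability that an image is real).
fooled = [0.9, 0.8]      # the discriminator believes the fakes
not_fooled = [0.1, 0.2]  # the discriminator catches the fakes

print("generator loss when fakes pass:  ", generator_loss(fooled))
print("generator loss when fakes fail:  ", generator_loss(not_fooled))
print("discriminator loss when fooled:  ", discriminator_loss([0.9], fooled))
print("discriminator loss when it wins: ", discriminator_loss([0.9], not_fooled))
```

When the fakes pass, the generator's loss drops and the discriminator's rises, and vice versa. Training alternates between the two, so each side keeps pushing the other to improve.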

Phase 2: Text-to-Image

The introduction of CLIP (Contrastive Language-Image Pre-training) in 2021 changed everything. Trained on a large set of captioned images, it could score how well a piece of text matches an image. OpenAI announced it alongside DALL-E 1, one of the first widely noticed text-to-image models, and used it to rank DALL-E's outputs. Those early images were blurry and abstract, but they proved the concept worked.
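The core idea of matching text to images can be sketched in a few lines. A CLIP-style model turns both an image and a caption into a vector, and captions that describe the image end up pointing in a similar direction. The three-number vectors and the `rank_captions` helper below are made up for illustration; real embeddings come from trained encoders and have hundreds of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def rank_captions(image_vec, caption_vecs):
    """Return captions sorted from best to worst match for the image."""
    scores = {
        caption: cosine_similarity(image_vec, vec)
        for caption, vec in caption_vecs.items()
    }
    return sorted(scores, key=scores.get, reverse=True)

# Hypothetical embeddings, invented for this example.
image_embedding = [0.9, 0.1, 0.2]
caption_embeddings = {
    "a photo of a dog": [0.8, 0.2, 0.1],
    "a bowl of soup": [0.1, 0.9, 0.3],
}

ranking = rank_captions(image_embedding, caption_embeddings)
print(ranking[0])  # the caption whose vector is closest to the image's
```

The same score works in reverse: given one caption and many candidate images, it picks the image that fits the text best, which is how a scoring model can steer or filter a generator.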

Phase 3: The Diffusion Revolution (We Are Here)

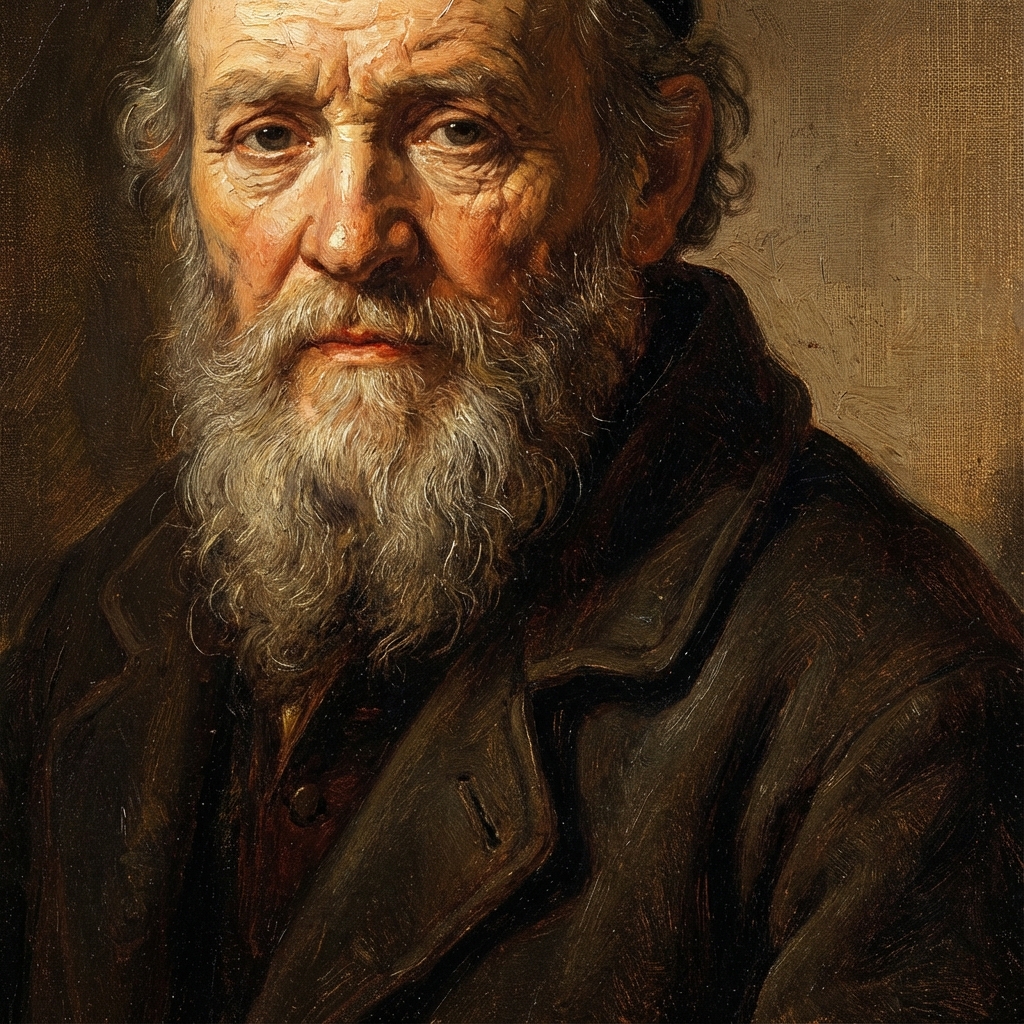

Diffusion models are the current gold standard. They start with a canvas of pure random noise and remove that noise step by step, guided by your prompt, until an image emerges. This process allows for incredible detail and texture, and for fine control over lighting. Tools like Percify use advanced versions of these models, fine-tuned for specific aesthetics like anime, oil painting, or professional photography.
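The denoising loop itself is short enough to sketch. The toy below treats four numbers as the "image" and uses a deterministic, DDIM-style update. The big assumption is `oracle_noise_predictor`: in a real model this is a large neural network that guesses the noise from the noisy image and the prompt, while here it cheats by looking at a known target. The noise schedule values and all names are illustrative, not taken from any particular tool.

```python
import math
import random

def make_alpha_bars(num_steps, beta_start=0.01, beta_end=0.3):
    """Fraction of clean signal left at each step: 1.0 at step 0, near 0 at the end."""
    alpha_bars = [1.0]
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        alpha_bars.append(alpha_bars[-1] * (1.0 - beta))
    return alpha_bars

def oracle_noise_predictor(x_t, alpha_bar, target):
    """Stand-in for the trained network: computes the noise in x_t from a known target."""
    return [
        (x - math.sqrt(alpha_bar) * c) / math.sqrt(1.0 - alpha_bar)
        for x, c in zip(x_t, target)
    ]

def sample(target, num_steps=50, seed=0):
    rng = random.Random(seed)
    alpha_bars = make_alpha_bars(num_steps)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # start from pure noise
    for t in range(num_steps, 0, -1):
        a_t, a_prev = alpha_bars[t], alpha_bars[t - 1]
        eps = oracle_noise_predictor(x, a_t, target)
        # Estimate the clean image, then step to the next, less noisy level.
        x0_hat = [(xi - math.sqrt(1.0 - a_t) * e) / math.sqrt(a_t)
                  for xi, e in zip(x, eps)]
        x = [math.sqrt(a_prev) * c + math.sqrt(1.0 - a_prev) * e
             for c, e in zip(x0_hat, eps)]
    return x

target_pixels = [0.0, 0.5, 1.0, 0.5]
result = sample(target_pixels)
print([round(v, 3) for v in result])
```

Because the oracle is perfect, the loop lands on the target. A trained network only approximates the noise, which is why real samplers take many small steps, and why the same prompt can yield a different image from a different starting noise.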